AWS Cloud Resume Challenge:

Building This Website via the AWS Console

A Little Background

After years of working in the music industry, I realized I wanted to work in an environment and industry that was more of a meritocracy. I was searching for a career pivot that would let me put my love of technical systems work and troubleshooting to use. I had recently begun learning Python and knew that I wanted to work in the computer science space, but I was overwhelmed by the number of avenues and disciplines available. A good friend of mine (and now-mentor) suggested that I give DevOps a look, as that was the field he worked in. When I asked what DevOps was and what it did, his response was, if I recall correctly, "Kind of a weird black-magic, wear-all-hats, cloud infrastructure management job somewhere between Dev and Admin. We're the glue that holds websites, applications, and software companies together, kind of like a Mastering Engineer (a vital but somewhat mercurial position in music production)." Okay, color me intrigued.

Over the course of the next year or so, I committed myself to learning everything I could about the magical and elusive world of DevOps. The constant loop of research, implementation, and troubleshooting grabbed me in a way that nothing had since I first began my training as an Audio Engineer. Week after week, I dedicated any and all time I could to absorbing as much as possible about the world of cloud infrastructure management, including taking AWS Certified Cloud Practitioner and AWS Certified Solutions Architect - Associate exam prep courses on Pluralsight. It was at once engaging and frustrating, rewarding and difficult. Eventually I felt confident enough to attempt the certification exams. Much to my delight (and dare I say relief), I passed both exams on my first attempt.

After completing my exams, with two shiny new certifications in hand, I began searching for projects that would let me get my hands on some actual cloud infrastructure. Gone (temporarily) were the days of watching video lectures and taking fastidious notes for 6-8 hours per day. I wanted to build something. As a wise, if misguided, Jedi once said: "This is where the fun begins." While researching which projects would make a good starting point, I came across the Cloud Resume Challenge. Perfect.

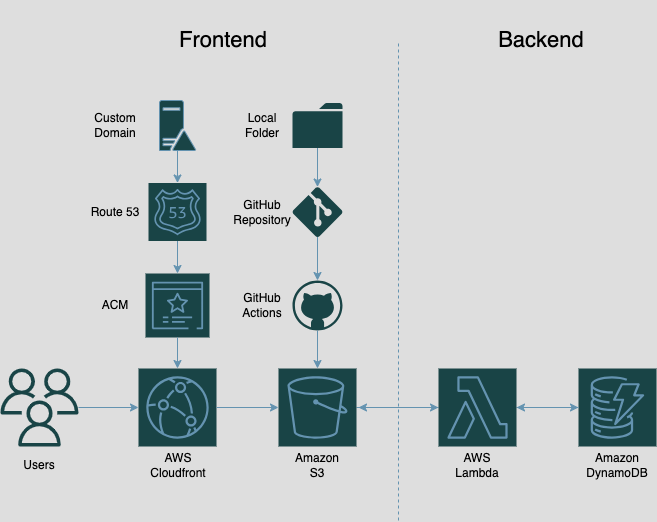

The challenge, in a nutshell, is a series of steps for hosting your own website to house your resume and showcase your portfolio of projects. It serves as a flexible guideline for getting your resume website up and running.

The steps are listed as follows:

1. Get your AWS Cloud Practitioner certification

2. Write your resume in HTML

3. Style your resume with CSS

4. Deploy your HTML resume as an Amazon S3 static website

5. Serve the website over HTTPS for security, using Amazon CloudFront as the CDN

6. Point a custom DNS domain name to the CloudFront distribution (the challenge recommends Amazon Route 53 as the DNS provider)

7. Use JavaScript to add a visitor counter that displays how many people have accessed your website

8. Use Amazon DynamoDB to store the number for your visitor counter

9. Create an API that accepts requests from your web app and communicates with the database (AWS API Gateway and Lambda are the suggested services here)

10. Write your Lambda function in Python using the boto3 library for AWS

11. Include some tests for your Python code

12. Deploy your configuration using Infrastructure as Code (IaC). The challenge initially suggests the AWS SAM template and CLI, but notes that Terraform may be a better option, though slightly more complicated

13. Create a GitHub repository to implement source control and CI/CD

14. Set up CI/CD for the back end (via GitHub Actions)

15. Set up CI/CD for the front end (also via GitHub Actions)

16. Write a blog post about the experience

Building The Website

I ended up doing things a little differently than the guidelines suggested, but I will take you through my process. As I mentioned, I already had my certifications before beginning this project. Step one, complete.

Steps 2 & 3: HTML and CSS. I took a Graphic Design class while attending junior college in my younger years, during which we learned some HTML basics. That, along with formatting my MySpace page in my youth, was about the extent of my HTML experience. Fortunately, I did not find it too difficult to shake the rust off. CSS, however, is a different monster altogether. It definitely took me some time to wrap my head around, and I still would not say I'm confident in CSS by any stretch, but I picked up enough to format this website, which, as you can see, functions just fine and, I think, looks pretty nice. I found an HTML template (with CSS styling), refactored some things to loosely fit what I was picturing, and moved on to the next steps. I wanted a basic, functioning version of the website so that I would have a working page to test my infrastructure on, with the intention of returning to polish it up at the end of the process.

Next up, steps 4-6: S3 static website, HTTPS (via CloudFront), and DNS. Setting up the S3 bucket was easy and straightforward. I should clarify here that at this point I was setting everything up using the AWS console. I wanted to use the most straightforward method for the initial setup in order to confirm my grasp of the basic architecture and remove as many variables as possible from this part of the process. After setting up the S3 bucket, I uploaded the project folder to it. After enabling Static Website Hosting on S3 and clicking the object URL to test things, I was given an Access Denied error screen. That made sense: I had disabled public access in the bucket settings. I knew that I could give CloudFront access to the bucket without making the entire bucket public, so I went forward with that. I created the CloudFront distribution, set the S3 bucket as the origin domain, enabled Origin Access Control, and enabled the redirect-to-HTTPS setting. Once the distribution was created, I went back into the CloudFront settings, created a policy allowing CloudFront access, and copied and pasted it into the S3 bucket policy. While researching during this phase of the project, I learned that if you are using a CloudFront CDN to serve your S3 static website, you do not need to enable static website hosting on the S3 bucket, so I disabled it there.

The next step to tackle was DNS. I purchased gjackman.com via Route 53, added "resume.gjackman.com" as a CNAME, requested a public certificate for "*.gjackman.com" (so I could use other subdomains in the future if I so desired), and, once the certificate was issued, selected it in the CloudFront settings. Pretty smooth sailing so far; my training was serving me well. However, this is where I ran into my first little speed bump: when I entered the domain into my browser, I was denied access.
It took me a little while to figure out that I had to go into Route 53 and create A (alias) records pointing my domain to my CloudFront distribution. At this stage I was also trying to add a record for "gjackman.com" in addition to "resume.gjackman.com" and "www.gjackman.com", and it was failing. After some moderate frustration and a fair amount of parsing the AWS documentation and various related Stack Overflow questions, I deduced that I needed to create a certificate that included both "*.gjackman.com" and "gjackman.com" in order for the DNS validation to succeed, since a wildcard certificate does not cover the bare apex domain. Lesson learned.
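The two pieces of configuration from this stage can be sketched as plain Python data: the kind of bucket policy CloudFront asks you to paste in for Origin Access Control, and the Route 53 alias records that point the domain names at the distribution. This is a minimal sketch rather than the exact configuration behind this site; the bucket name, account ID, distribution ID, and CloudFront domain name in it are hypothetical placeholders. The one fixed value is Z2FDTNDATAQYW2, the hosted zone ID Route 53 uses for every CloudFront alias target.

```python
import json

# Fixed hosted zone ID that Route 53 uses for all CloudFront alias targets.
CLOUDFRONT_ALIAS_ZONE_ID = "Z2FDTNDATAQYW2"


def build_oac_bucket_policy(bucket: str, account_id: str, distribution_id: str) -> dict:
    """Bucket policy letting one CloudFront distribution (via OAC) read objects.

    The bucket stays private; only requests coming from the named
    distribution are allowed to call s3:GetObject.
    """
    distribution_arn = f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontServicePrincipalReadOnly",
                "Effect": "Allow",
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {"StringEquals": {"AWS:SourceArn": distribution_arn}},
            }
        ],
    }


def build_alias_change_batch(hostnames: list[str], cloudfront_domain: str) -> dict:
    """Route 53 change batch that creates or updates an A (alias) record per hostname."""
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_ALIAS_ZONE_ID,
                        "DNSName": cloudfront_domain,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
            for name in hostnames
        ]
    }


if __name__ == "__main__":
    # Hypothetical placeholder values, not real resources.
    policy = build_oac_bucket_policy("my-resume-bucket", "123456789012", "E1EXAMPLE")
    batch = build_alias_change_batch(
        ["gjackman.com", "www.gjackman.com", "resume.gjackman.com"],
        "d111111abcdef8.cloudfront.net",
    )
    print(json.dumps(policy, indent=2))
    print(json.dumps(batch, indent=2))
```

To apply these from code instead of the console, boto3's `s3.put_bucket_policy(Bucket=..., Policy=json.dumps(policy))` and `route53.change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch)` (with your domain's own hosted zone ID) accept these structures. It is also worth remembering that the ACM certificate CloudFront uses has to be requested in us-east-1 (N. Virginia), and that it needs both names listed, since `*.gjackman.com` matches `resume.gjackman.com` but not `gjackman.com`.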

With that sorted, it was time to move on to steps 7-10: DynamoDB, JavaScript, API (& Lambda), and Python. The DynamoDB database was super simple to set up: I created a table and added "views" as a table item with the value 1. Here is where I diverged from the guidelines a bit. I obviously needed something on the back end to interact with my DynamoDB table, but setting up API Gateway to trigger Lambda seemed to needlessly complicate the architecture of the website when I knew for a fact that the website could invoke the Lambda function directly, and the function could interact with the database table without an additional middleman, if you will. So I decided to use a Lambda Function URL in place of API Gateway. I realize that the purpose of including API Gateway in the Resume Challenge was likely to add another commonly used resource that people like myself could get their hands on, but it felt like a hat on a hat, and I could not, in good conscience, allow unnecessary redundancy or clutter in the architecture of the website. K.I.S.S.: Keep it simple, stupid.

I created the Lambda function with Python as the runtime. Even if the Resume Challenge guidelines had suggested a different language, I still would have preferred Python, as it is the programming language I am most familiar with (and an add/replace function is something I have written in Python before). During the setup of the Lambda function, I enabled the Function URL, set up Cross-Origin Resource Sharing (CORS), and whitelisted my domain name in the Lambda function settings. I looked up the proper syntax for reading data from and writing data to DynamoDB and wrote the function (I had a little help here, as I looked at various examples across the internet from people who had written similar functions). After this was complete, I added DynamoDB access to the Lambda function's execution role. I should also note that I declined to do step 11: Python tests.
Writing tests is not something I have experience with, and while I am confident I would (and will) be able to do it, I chose to forgo this step for the sake of expediency, as I already knew that my Python code was solid and my Lambda function was... well, functioning.
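A handler along these lines is one way the visitor counter could look behind a Lambda Function URL. It is a minimal sketch, not the function running on this site: the `TABLE_NAME` environment variable, its default value, the partition key `id` with the value `"counter"`, and the numeric `views` attribute are all assumptions. Rather than reading the count and writing it back (an add/replace approach), it swaps in DynamoDB's atomic `ADD` update, so two visitors arriving at the same moment cannot overwrite each other's increment.

```python
import json
import os

# Hypothetical configuration: table name from an environment variable,
# a partition key called "id", and a numeric "views" attribute.
TABLE_NAME = os.environ.get("TABLE_NAME", "visitor-counter")
COUNTER_KEY = {"id": "counter"}

_table = None


def _get_table():
    """Create the DynamoDB Table resource once and reuse it on warm invocations."""
    global _table
    if _table is None:
        import boto3  # deferred import so the counter logic can run without AWS

        _table = boto3.resource("dynamodb").Table(TABLE_NAME)
    return _table


def increment_views(table) -> int:
    """Atomically add 1 to the views attribute and return the new total."""
    response = table.update_item(
        Key=COUNTER_KEY,
        UpdateExpression="ADD #views :one",
        ExpressionAttributeNames={"#views": "views"},
        ExpressionAttributeValues={":one": 1},
        ReturnValues="UPDATED_NEW",
    )
    # boto3's resource layer returns numbers as decimal.Decimal, so convert for JSON.
    return int(response["Attributes"]["views"])


def lambda_handler(event, context, table=None):
    """Function URL entry point; CORS headers come from the URL's CORS settings."""
    table = table if table is not None else _get_table()
    views = increment_views(table)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"views": views}),
    }
```

Because the table can be passed in, the counter logic can be exercised with a small stub object in place of DynamoDB, which would be a natural starting point for step 11. For the deployed version, the execution role needs `dynamodb:UpdateItem` on the table.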

Here is another point at which I deviated from the original Resume Challenge guidelines. They suggest moving to IaC at this point, but this did not make much sense to me. I wanted to cement source control and CI/CD before moving into a whole new discipline (that being Terraform), so I decided to skip past IaC for the time being and finish the website, resolving that once it was up and running to my satisfaction, I would tear the whole thing down and set it up anew using exclusively IaC. Efficient? No, not at all. But it was really important to me to fully drive home and reinforce what I was learning; adopting a half-completed architecture into Terraform seemed even less efficient from a learning standpoint, and I could foresee it adding some unnecessary complications to the process.

So, I moved on to steps 13-15: source control and CI/CD. I already had some experience with Git from working with my friends on a few Python projects, so I was familiar with it and with how to interact with it via the CLI. I set up the repository locally, committed my changes, created a remote repository on GitHub, added it as my origin, and pushed. Next: CI/CD with GitHub Actions. This was not something I had done before, but it ended up being fairly straightforward, as these things go. I created the .github/workflows directory, added a CI/CD .yml file inside it, and copy/pasted the Sync S3 Bucket action into it before setting up secrets in GitHub Actions so as not to hard-code any sensitive information, such as the name of my S3 bucket. While the bucket name is not really a risk, since only my CloudFront distribution is whitelisted for access, it is still good practice, and knowing how to use secrets would come in handy later when writing my Terraform modules, as there is absolutely (well, relatively) sensitive data contained within those. When I tried to push this through, however, I got a permissions error.
I went into AWS IAM in the console, as one does when trying to resolve a permissions error, and created a user for GitHub with a permissions policy to grant access to S3. Lo and behold: a success!
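For a deploy user like this, a narrowly scoped policy might look like the sketch below. It assumes the workflow uploads with an `aws s3 sync`-style step that may also delete removed files (hence `s3:DeleteObject`); the bucket name is a hypothetical placeholder, and the exact permissions depend on which action and flags the workflow actually uses. Scoping the user to one bucket limits the damage if its access keys, which would normally live in GitHub secrets, ever leaked.

```python
import json


def build_s3_deploy_policy(bucket: str) -> dict:
    """Least-privilege IAM policy for a CI user that syncs a site into one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, so it targets the bucket ARN itself.
                "Sid": "ListSiteBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Uploading and deleting are object-level actions, so they target bucket/*.
                "Sid": "WriteSiteObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "my-resume-bucket" is a hypothetical placeholder.
    print(json.dumps(build_s3_deploy_policy("my-resume-bucket"), indent=2))
```

The printed JSON can be pasted into the IAM console's JSON policy editor. Note that `s3:ListBucket` applies to the bucket ARN while the object actions apply to `bucket/*`; putting them on the wrong resource results in AccessDenied errors.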

Now that I had a fully functioning website, it was time to move on to IaC with Terraform. I will describe that process in another post, as it was much more involved and I hit something of a learning curve. This first phase, however, I found to be (somewhat surprisingly) relatively straightforward. There were definitely moments of confusion and frustration throughout, but that is to be expected when a) doing something new and b) doing any form of troubleshooting, which, I would like to take this opportunity to restate, is my bread and butter.

If you made it this far, thank you for taking the time to read this. My hopes for this post are twofold. First, I hope that someone who may be struggling with the Cloud Resume Challenge comes across it and is able to glean some information about common pain points in the process. Second, though perhaps chiefly, I hope this account can serve as a demonstration of my process (and dare I say aptitude) of not only trying my hand at a technology I previously had little practical experience with, but succeeding in the endeavor.